
DevOps: From virtual machines to containers


14 July 2020


One of the reasons for the success of DevOps¹ is its focus on resolving the conflict between the development (Dev) and the operations (Ops) of information systems. Dev is responsible for meeting business requirements promptly, while Ops is responsible for keeping systems stable and available. How mature an architecture is, relative to the state of the art, depends precisely on how well it reconciles these two needs: the speed at which changes can be introduced and the stability the system offers.

Every running application consists of the source code written by developers plus the other elements needed to make it work: static or dynamic libraries (database modules, APIs, drivers, mathematical functions), binaries needed to execute the code (language interpreters, execution environments, scripts, application servers) and configuration files.

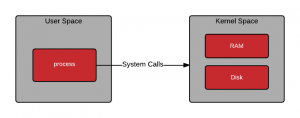

In addition to these components, there may be other files such as static resources (translations, images, precompiled and optimized data). This set of components is called the execution environment and is located in the user-space of the system.

The execution environment and the application also need an operating system, which in turn is preconfigured by the systems engineers to operate on a particular infrastructure and can include specific components in kernel-space.

Figure 1 – User Space and Kernel Space [Source: Red Hat]

Virtual machines

Virtual machines² have long been an efficient technique for managing operating systems and making applications independent of the hardware. Managing them has traditionally been the job of systems engineers. A virtual machine (VM) presents the user with the same interface as a real server, complete with its hardware components, without actually monopolizing the physical hardware. With VMs, a system can be installed with its own configuration on a shared physical server while remaining independent of the other systems running on it. A single host server can run a large number of virtual machines, depending on the resources available.

A VM runs on top of virtualization software called a hypervisor, installed either directly on the infrastructure or on a host operating system. The hypervisor takes care of managing VMs (creation, modification, deletion, replication, backup, and so on) and of maintaining them while they run. It distributes the available infrastructure resources among the different machines according to allocation priorities set in advance by the operator.
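As a rough illustration of priority-based distribution, the following Python sketch splits a fixed pool of host resources among VMs in proportion to operator-assigned weights. The `VMRequest` type, the `allocate` function and the weights are hypothetical; real hypervisors schedule CPU and memory dynamically with their own share and reservation mechanisms, so this is a static simplification, not how any particular hypervisor works.

```python
from dataclasses import dataclass


@dataclass
class VMRequest:
    name: str      # assumed unique per VM
    priority: int  # hypothetical relative weight set in advance by the operator


def allocate(total_units: int, vms: list[VMRequest]) -> dict[str, int]:
    """Split total_units among VMs in proportion to their priority.

    Uses the largest-remainder method so that whole units add up exactly.
    """
    weight_sum = sum(vm.priority for vm in vms)
    if weight_sum <= 0:
        raise ValueError("at least one VM needs a positive priority")
    # Exact (fractional) share for each VM.
    exact = {vm.name: total_units * vm.priority / weight_sum for vm in vms}
    # Whole units first, by truncating each exact share.
    shares = {name: int(value) for name, value in exact.items()}
    leftover = total_units - sum(shares.values())
    # Give any leftover units to the VMs that lost the largest fraction.
    ranked = sorted(exact, key=lambda name: exact[name] - shares[name], reverse=True)
    for name in ranked[:leftover]:
        shares[name] += 1
    return shares


vms = [VMRequest("web", 1), VMRequest("batch", 1), VMRequest("db", 2)]
print(allocate(16, vms))  # e.g. 16 GB of host RAM shared by three VMs
```

The largest-remainder step is a design choice that guarantees no unit of the pool is lost to rounding.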

VMs isolate services from the underlying hardware by fully reproducing the execution environment inside the operating system installed in each VM. That guest operating system then manages resources among the applications in its own user-space.

To distribute a service at large scale, or simply across different types of infrastructure (cloud, workstations, physical servers), a complete copy of the execution environment must be created each time. When several services installed on the same machine use the same components (libraries, binaries, files or parts of kernel-space), those components end up duplicated, because the same resources must be allocated again in every virtual machine. Once a virtual machine's fixed resources are exhausted, you must either create a new one or stop it to increase the resources assigned to its operating system. Replicating a virtual machine means making a complete copy of this entire structure.

![Figure 2 - Virtual Machines and Containers [Source: Google]](https://www.digital4pro.com/wp-content/uploads/2020/07/01-300x116.png)

Figure 2 – Virtual Machines and Containers [Source: Google]

Containers

Containers aim to provide a more efficient and sophisticated form of virtualization than VMs by creating a separate level of virtual abstraction, called a layer, for each element the application requires.

The term's association with freight transport is no accident: it comes from the idea of a standard unit suitable for carrying goods of any kind. Whatever the carrier and the infrastructure (ships, trains, road trains…), these units follow a common interface standard for hooking them up. Software containers, likewise, are built from a stack of layers, each of which is allocated in the infrastructure's resources only once.
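The idea that each layer is allocated only once can be sketched as a content-addressed store: a layer is identified by the hash of its contents, so two images built on the same base point at the same stored blob. This is a toy model, assuming SHA-256 digests in the spirit of the OCI image format; the `LayerStore` class and its methods are hypothetical and omit compression, tar archives and manifests.

```python
import hashlib


class LayerStore:
    """Toy content-addressed store: each distinct layer is kept exactly once."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def put(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        # setdefault stores the blob only if this digest is not present yet.
        self.blobs.setdefault(digest, content)
        return digest

    def build_image(self, layers: list[bytes]) -> list[str]:
        """An image is modeled as the ordered list of its layer digests."""
        return [self.put(layer) for layer in layers]


store = LayerStore()
base = b"base operating system files"
runtime = b"language runtime"
image_a = store.build_image([base, runtime, b"service A code"])
image_b = store.build_image([base, runtime, b"service B code"])
print(len(store.blobs))  # two images, six layer references, four stored blobs
```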

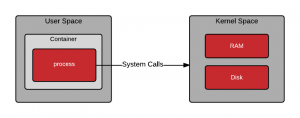

Transporting, starting and managing a container is much simpler and faster than doing the same with a VM. A container preserves most of the isolation offered by a virtual machine, but it runs in user-space: to the host, it is an ordinary application. Kernel-space is no longer replicated for each instance through a hypervisor; containers share the host's kernel, and the virtualization system that runs them is called the container engine.

Containers remove the need to distribute intermediate packages (such as installation files), and they capture the definition of the application's operating environment through infrastructure-as-code and configuration-as-code techniques.
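To make "environment as code" concrete, here is a hypothetical Python sketch that turns a declarative description of an environment into a Dockerfile. `EnvironmentSpec`, `to_dockerfile`, the base image tag and the package list are illustrative assumptions; only the Dockerfile instructions themselves (`FROM`, `RUN`, `ENV`, `WORKDIR`, `COPY`, `CMD`) are standard.

```python
import json
from dataclasses import dataclass, field


@dataclass
class EnvironmentSpec:
    """Hypothetical declarative description of an application's environment."""
    base_image: str
    packages: list[str] = field(default_factory=list)
    env: dict[str, str] = field(default_factory=dict)
    command: list[str] = field(default_factory=list)


def to_dockerfile(spec: EnvironmentSpec) -> str:
    """Render the spec as Dockerfile text, one instruction per line."""
    lines = [f"FROM {spec.base_image}"]
    if spec.packages:
        lines.append("RUN pip install --no-cache-dir " + " ".join(spec.packages))
    for key, value in spec.env.items():
        lines.append(f"ENV {key}={value}")
    lines.append("WORKDIR /app")
    lines.append("COPY . /app")
    if spec.command:
        # Exec form: a JSON array, so the command runs without a shell.
        lines.append("CMD " + json.dumps(spec.command))
    return "\n".join(lines) + "\n"


spec = EnvironmentSpec(
    base_image="python:3.12-slim",
    packages=["flask"],
    env={"APP_ENV": "production"},
    command=["python", "app.py"],
)
print(to_dockerfile(spec))
```

In practice the Dockerfile is usually written by hand and versioned next to the code; the point here is only that the environment is described as text that can be reviewed, versioned and rebuilt, rather than configured manually.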

This packaging makes it possible to move modular services from one cloud to another and to release them quickly. It also speeds up configuration provisioning and eliminates the manual configuration work previously carried out by systems engineers; that responsibility is now shared with developers, and the collaboration becomes closer and more flexible throughout the development process.

Figure 3 – User Space and Kernel Space with containers [Source: Red Hat]

Instead of virtualizing the hardware stack, as virtual machines do, containers perform virtualization at the operating system level. This way, several containers can run directly on the host operating system's kernel. As a result, containers are much lighter: they share the operating system kernel, boot much faster, and use a fraction of the memory that an entire operating system requires.
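A back-of-the-envelope comparison shows why sharing the kernel and common layers matters. The sizes below are invented for illustration only, and the model assumes one VM or one container per service, with all services using the same libraries; it leaves out the host operating system, which both approaches need.

```python
# Hypothetical sizes in MB, chosen only to make the arithmetic concrete.
GUEST_OS_MB = 2000  # a complete guest operating system inside each VM
SHARED_MB = 300     # libraries and binaries that every service uses
APP_MB = 50         # each service's own code and configuration


def vm_footprint(n_services: int) -> int:
    """One VM per service: OS, shared components and app are all duplicated."""
    return n_services * (GUEST_OS_MB + SHARED_MB + APP_MB)


def container_footprint(n_services: int) -> int:
    """One container per service: the host kernel is reused, the shared
    layer is allocated once, and only the app layer is per service."""
    return SHARED_MB + n_services * APP_MB


for n in (1, 10):
    print(n, vm_footprint(n), container_footprint(n))
```

Under these assumptions the VM total grows by the full 2,350 MB per service, while the container total grows by only the 50 MB application layer.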

Containers make it possible to create environments isolated from other applications, and they are designed to work practically anywhere, greatly simplifying development and deployment on Linux, Windows and Mac operating systems, on virtual or bare-metal machines, on a developer's machine, in an on-premises³ data center and, of course, in the cloud.

Containers virtualize CPU, memory, storage and network resources at the operating system level, giving developers a view of the operating system that is logically isolated from other applications.

From monolithic to service-based architecture

Containers work best in service-based architectures. Unlike monolithic architectures, where every part of the application is intertwined with the others, service-based architectures split products into distinct components. Because operations are separated, the remaining services can keep running even if one of them is interrupted, which increases the reliability of the application as a whole.
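The reliability argument can be sketched in a few lines of Python. Here plain functions stand in for services (an assumption: in a real deployment each would be a separate process or container reached over the network), and the hypothetical `handle_request` router confines a failure to the service that raised it.

```python
from typing import Callable


def handle_request(services: dict[str, Callable[[str], str]], name: str, payload: str) -> str:
    """Route a request to one service; a failure stays confined to that service."""
    try:
        return services[name](payload)
    except Exception as exc:  # isolate the fault instead of crashing the whole app
        return f"{name} unavailable: {exc}"


def catalog(item: str) -> str:
    return f"catalog entry for {item}"


def payments(order: str) -> str:
    # Simulated outage of one service.
    raise ConnectionError("payment gateway timeout")


services = {"catalog": catalog, "payments": payments}
print(handle_request(services, "payments", "order-42"))
print(handle_request(services, "catalog", "book"))  # still served despite the outage
```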

Splitting an application into services also makes development faster and more reliable. Smaller components are easier to manage, and because the services are separate, it is easier to test the inputs and outputs of each one.

Containers are ideal for service-based applications, because you can run a health check on each container, limit each service to specific resources, and start and stop services independently of one another.
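A minimal supervisor loop shows what independent health checks and restarts look like. `ManagedService` and `supervise` are hypothetical stand-ins, not a container engine API; real platforms offer the same idea through mechanisms such as Docker's `HEALTHCHECK` instruction or Kubernetes liveness probes. Resource limits would be set on the engine (for example with `docker run --memory` and `--cpus`), which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ManagedService:
    """Hypothetical stand-in for one containerized service."""
    name: str
    probe: Callable[[], bool]  # health check: True means healthy
    running: bool = False
    restarts: int = 0

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        self.running = False


def supervise(services: list[ManagedService]) -> list[str]:
    """Probe each running service on its own and restart only the unhealthy ones.

    Returns the names of the services that were restarted.
    """
    restarted = []
    for svc in services:
        if svc.running and not svc.probe():
            svc.stop()
            svc.start()
            svc.restarts += 1
            restarted.append(svc.name)
    return restarted


catalog = ManagedService("catalog", probe=lambda: True)
payments = ManagedService("payments", probe=lambda: False)
for svc in (catalog, payments):
    svc.start()

print(supervise([catalog, payments]))  # only the unhealthy service is restarted
catalog.stop()                          # stopping one service leaves the other running
```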

Because containers abstract away the code they run, they let developers treat separate services as black boxes, further reducing the surface each developer has to manage. A developer working on a service that depends on other services can simply start a container for each of those services, without first having to set up the right environment and troubleshoot it.

1 DevOps is a set of practices that combines software development and IT operations. It aims to shorten the system development lifecycle and enable continuous delivery of high-quality software. DevOps is complementary to Agile software development; indeed, several aspects of DevOps derive from the Agile methodology.

2 In computer science, the term virtual machine (VM) refers to software that, through a virtual environment, emulates the behavior of a physical machine (a PC or a server) by allocating it hardware resources (portions of disk, RAM and CPU).

3 On-premises software, as opposed to software as a service (SaaS), is installed and run directly on local machines.

Bibliography:

- https://azure.microsoft.com/it-it/free/kubernetes-service/

- https://cloud.google.com/containers

- https://cloud.google.com/kubernetes

- https://aws.amazon.com/it/containers/

- DevOps Guide to integrate Development and Operations and produce quality software, Fabio Mora

- https://docs.docker.com

- Clean Architecture. Guida per diventare abili progettisti di architetture software, Robert C. Martin, 2018

- Clean Code: Guida per diventare bravi artigiani nello sviluppo agile di software, Robert C. Martin